By Nic Wise*

I've long been fascinated by how infrastructure works: all the bits of kit and equipment that we take for granted, and that most people don't even notice.

Water delivery? Check. Hydro power stations? Sign me up. Transmission grids? Sure. Wikipedia the heck out of that.

(image from Jillian Hancock)

So, I jumped at the opportunity to have a look around the Chorus Fibre Lab.

For those not in NZ, Chorus was split out from Telecom NZ, which was the old "Post Office" up until the 80s. In the 90s, Telecom was split into retail (Telecom, now Spark) and wholesale (Chorus), with Chorus running the copper network. When the fibre rollout got underway in the late 2000s, Chorus was a major player in the build-out in a number of areas, including Auckland.

The Fibre Lab was built before the fibre rollout really kicked off, as a way to explain to people (ahem, mostly to politicians) why we needed a fibre-to-the-home (FTTH) network with that much bandwidth.

So thanks to Brent, who works for Chorus, some of us who beta tested the rollout of the new Quic network got a tour and the chance to ask a bunch of, well, super geeky questions of people who I presume are used to very non-geeky questions.

First up, thanks to Brent and Bobby for the tour. It was awesome :)

The lab itself is a combination of "this is what you can do in your home" - think big 8K TVs, Wi-Fi 7, lots of IoT - and "this is how we roll out a network". There is a working, production exchange in one of the rooms, and lots of other networks to compare - 5G, 4G, Starlink, Fibre, etc.

The part I found the most interesting, though, was the fibre build out and what all the bits are. I have a really good mental model of layer 3 - IP, how a packet goes from my router to the wider internet. The bit I'm missing is how it goes from my router - physically - to the ISP. I can see the cable outside on the road, but what happens there??

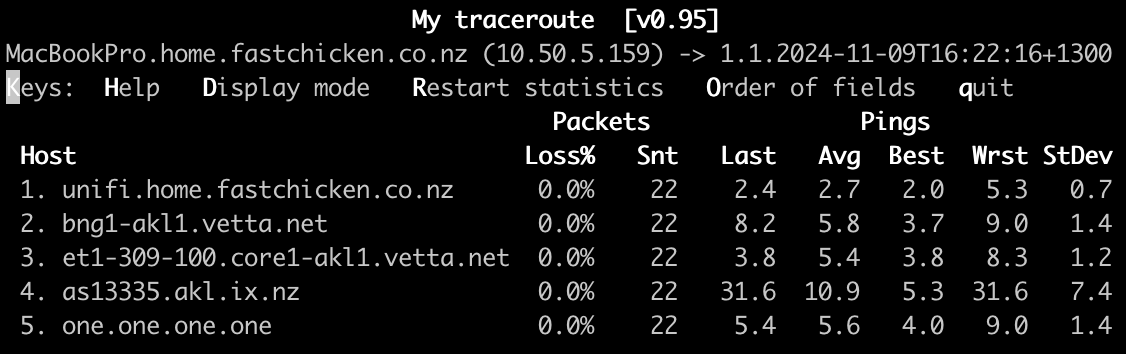

So that's what I'm going to focus on here - let's trace things from my router through to the BNG (Broadband Network Gateway) at Quic, which is the next hop for an IP packet.

Step 1 - Getting to the exchange

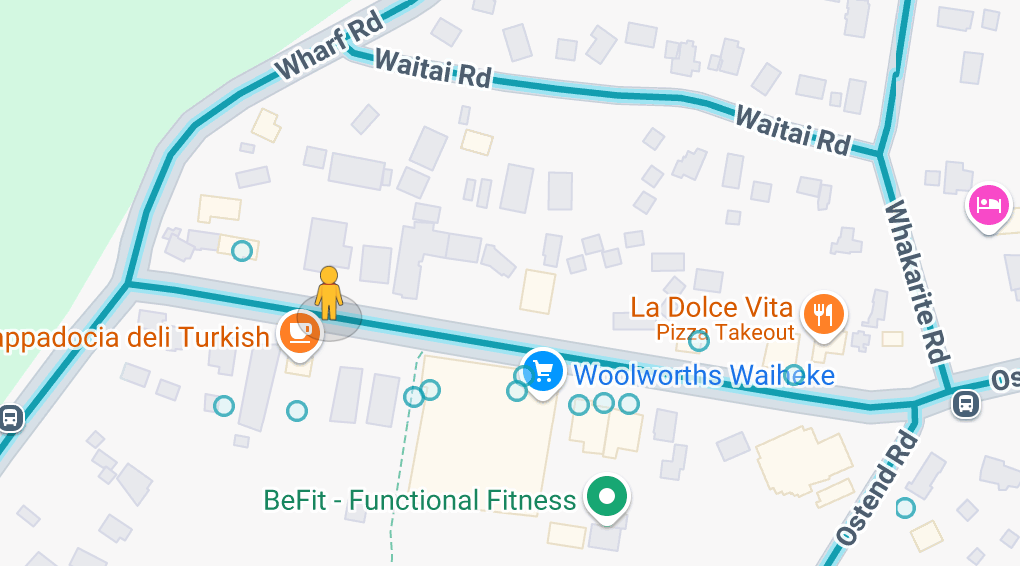

I live on Waiheke, and we have about 9000 full time residents, and that peaks at about 45,000 in summer. We have a phone exchange here, as most places this size do. The exchange is in Belgium St, which is about 700 metres away from my house, right next to Woolworths and the Auckland Council offices.

Fun fact: Chorus has a spare, unused 768-fibre cable - about 5 cm across - going from Waiheke to Auckland in case the Waiheke Exchange burns down. Backups of backups. Lots of redundancy when it takes weeks or months to deploy physical equipment.

It's kind of obvious when you go past - but my fibre line doesn't run straight there.

The first hop is from my ONT (Optical Network Termination - the box on the wall) to the power pole outside. The pole connects back to a pit which splits the single optical connection 16 ways. I'm pretty sure I'm the only one on this splitter, but there are often a lot more. The same light goes down each path from the splitter, but each ONT is set up so that it can only receive the data meant for its own location.

There are lots of splitters - every UFB pit has three of them - and while GPON (the underlying fibre technology) can have split ratios of up to 1:64, Chorus uses 1:16.

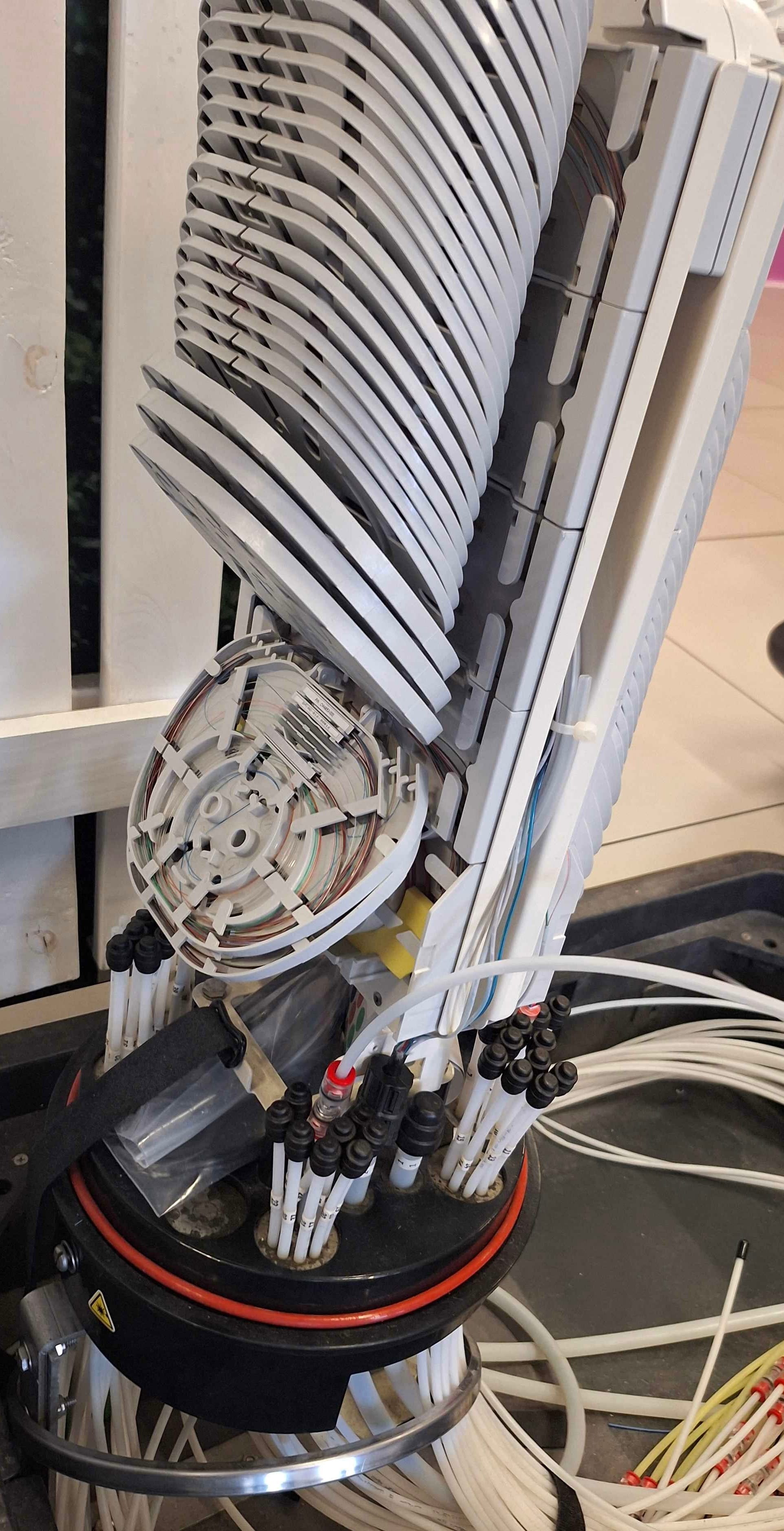

Tracing that back to the bottom of my road is an "Airblown Fibre Flexibility Point" (AFFP), or pit, which for me looks like a manhole / water mains cover at the end of my road. This has a stack of fibre connections and splitters, and connects back to the exchange over a small group of fibres.

The metal bits at the top of the tray - which are very small - split a single fibre into 16 fibres.

Up to this point, all of this is passive. If it gets flooded, it doesn't matter (the AFFPs are weatherproofed) - unlike an old copper cabinet, which would need replacing.

Step 2 - Exchange to ISP

The fibre then reaches the OLT - Optical Line Terminal. Up until now I have a single, split piece of glass from my house to the exchange. I share this piece of glass with my neighbours - up to, let's say, 16 of them (1:16 split). It arrives at a single port in the exchange. The port is set up to handle all of our ONTs by giving each one its own time slot for upstream traffic, so I get 125 microseconds, my neighbour gets the following 125 microseconds, and so on. This is also how some other shared network media work - you get some time on the line to yourself, and the line is divided between all the users.

This single port on an OLT can handle a number of customers (how many depends on how it's configured, but Chorus uses 16), and its core function is to handle both TDM (time division multiplexing) and FDM (frequency division multiplexing) over a single piece of fibre.

TDM is used within a single service for the GPON and XGSPON services. So I share a single frequency with all my neighbours on the same port/fibre line for upstream transmission.
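To make the time-slicing concrete, here is a minimal Python sketch of the simplified picture above: fixed 125 microsecond upstream slots handed out in turn to the 16 ONTs on one port. The function names and the strict round-robin order are assumptions for illustration only. Real GPON scheduling is more dynamic than this, so treat it as a toy model, not a description of how Chorus's gear actually allocates time.

```python
SLOT_US = 125  # upstream slot length in microseconds, as described above
SPLIT = 16     # ONTs sharing one OLT port (Chorus's 1:16 split)


def ont_for_time(t_us: int, slot_us: int = SLOT_US, onts: int = SPLIT) -> int:
    """Return the 0-based index of the ONT allowed to transmit at time t_us.

    Assumes fixed-size slots assigned in strict round-robin order.
    """
    return (t_us // slot_us) % onts


def cycle_time_us(slot_us: int = SLOT_US, onts: int = SPLIT) -> int:
    """Time for every ONT on the port to get one slot each."""
    return slot_us * onts


if __name__ == "__main__":
    for t in (0, 124, 125, 1999, 2000):
        print(f"t={t:>4} us -> ONT {ont_for_time(t)}")
    print(f"Full cycle: {cycle_time_us()} us")
```

Under these assumptions, with all 16 slots in use, each ONT would get its turn again every 2000 microseconds (2 ms).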

FDM (frequency division multiplexing) separates the different services - GPON (up to 1G) vs XGSPON (Hyperfibre 2/4/8G), which operates at a different frequency.

Downstream is a broadcast domain, with no constraints or restrictions in the Chorus network.

You can think of normal fibre operating on red light, while Hyperfibre is on green light. The fibre itself can carry both "colours" at the same time without conflict.

The different ONTs (normal vs Hyperfibre) can't see the other colours, so you can use them both at the same time without interference. But everyone on a single Gigabit Fibre port can "see" each other's traffic - sort of. The light physically reaches each ONT in the group, but each ONT (and the source OLT port) is set up so that it can only use its specific slice of time. And most likely, there is some encryption in here too, where my ONT and the OLT share a key - so my neighbour can't decrypt my traffic even if they wanted to.

Which is likely another reason why the ONT is not user-configurable.

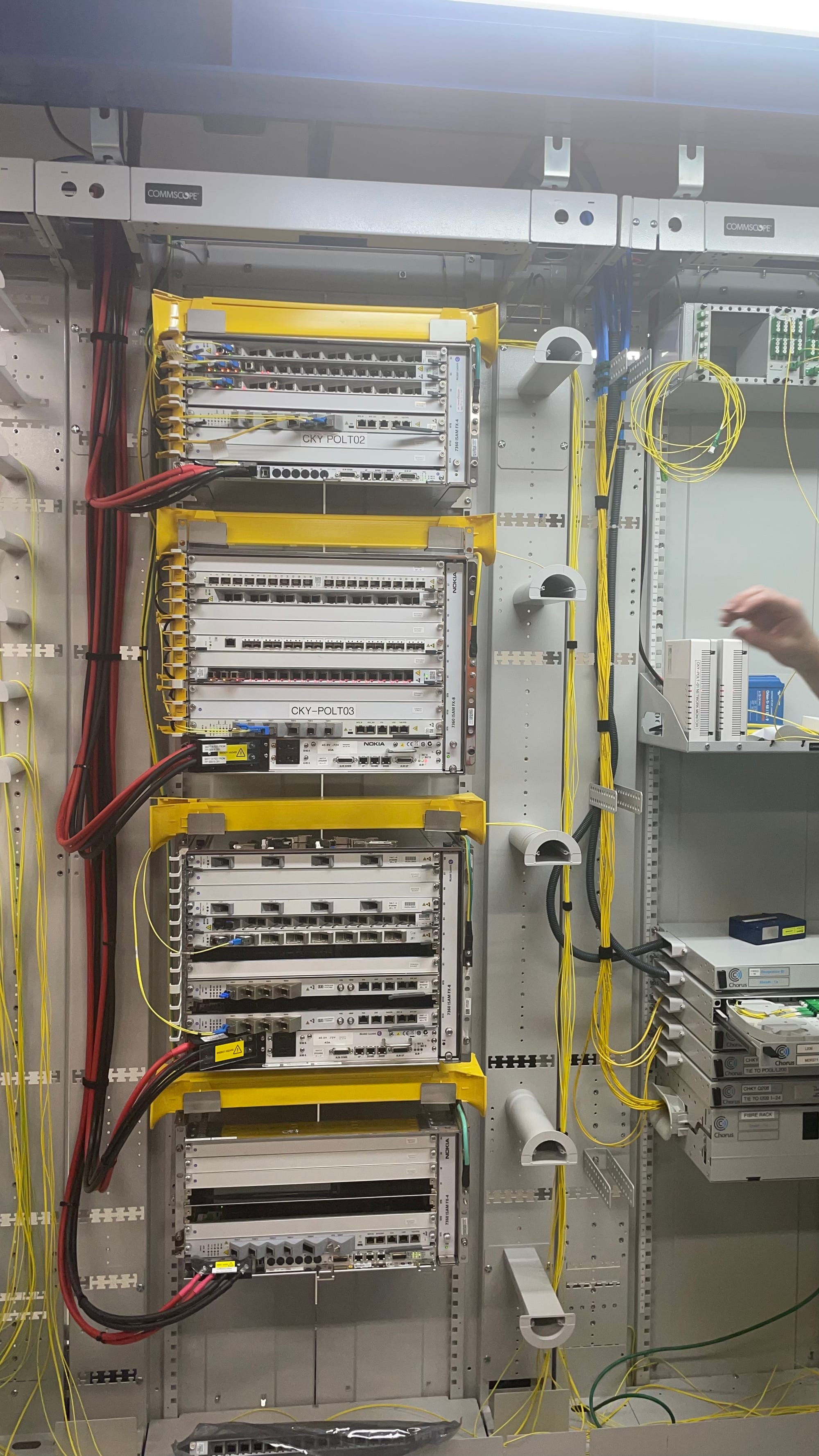

The exchange is where we first see powered, network-looking gear.

"My" port might be in the top left of that top piece of gear. It's a single fibre in, and goes into the rest of the OLT for routing/sending.

In terms of power, each one of these OLTs (there are 3 in this picture) consumes about 2 kW of power, which is a normal tea kettle running at full tilt.

Sounds like a lot, but each one can handle around 2000 customers, so the per-customer power usage is about 1 W, which is, to be honest, bugger all. 1/8th of a fairly dim LED lightbulb.
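The per-customer arithmetic is easy to check. This is a small sketch using only the round numbers quoted above (about 2 kW per OLT, around 2000 customers each); the function names are made up for the example, and the yearly figure simply assumes the OLT draws that power continuously.

```python
OLT_POWER_W = 2000        # about 2 kW per OLT, as quoted on the tour
CUSTOMERS_PER_OLT = 2000  # "around 2000 customers" per OLT


def watts_per_customer(olt_power_w: float, customers: int) -> float:
    """Share of one OLT's power draw attributable to each customer."""
    return olt_power_w / customers


def kwh_per_year(watts: float) -> float:
    """Energy used in a year by a constant load of `watts` (assumes 365 days)."""
    return watts * 24 * 365 / 1000


if __name__ == "__main__":
    per_customer = watts_per_customer(OLT_POWER_W, CUSTOMERS_PER_OLT)
    print(f"{per_customer:.1f} W per customer")
    print(f"{kwh_per_year(per_customer):.2f} kWh per customer per year")
```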

Now this is where I get a bit fuzzy - and I might need some clarity from Brent or Bobby. But from here, it's layer 2 packets - think Ethernet - up to the BNG (Broadband Network Gateway). The OLT is configured so that the customer on port WH-POLT02:1-1-1-6-9 (similar to mine, but not mine) has all its packets sent over to the QUIC BNG port (which might be QUIC-BNG:1 in network port speak), which is in... an exchange in Auckland.

The actual routing of that is... well, it wasn't explained. But I'm guessing it's a fairly normal packet switching network - I want to get a packet from port A to port B, this is the route I take, same as ethernet would over your local network if you have multiple switches and segments.

From the IP point of view, my next hop is the QUIC BNG. All of this so far has happened below layer 3 (IP). If you want to think smaller, the Chorus network is a big ethernet, like you have in your house, with many interconnected switches, and this is moving packets and data from machine to machine. Except on a regional scale, and with a lot more control over how data moves.
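One way to picture that layer 2 handover is as a lookup table: each customer-facing port is tied to exactly one ISP handover port. The sketch below is purely illustrative. The table, the function name and the idea of a simple dictionary lookup are all assumptions; how Chorus actually implements the mapping wasn't explained on the tour. The port names are the example ones mentioned above.

```python
from typing import Optional

# Hypothetical mapping: customer access port -> ISP handover (BNG) port.
# The port names are the illustrative ones from the text, not real config.
HANDOVER_BY_ACCESS_PORT = {
    "WH-POLT02:1-1-1-6-9": "QUIC-BNG:1",
}


def handover_for(access_port: str) -> Optional[str]:
    """Return the ISP handover port for a customer port, or None if unknown."""
    return HANDOVER_BY_ACCESS_PORT.get(access_port)


if __name__ == "__main__":
    print(handover_for("WH-POLT02:1-1-1-6-9"))
    print(handover_for("SOME-OTHER-PORT"))
```

The point of the model is that no IP routing decision is made anywhere in it: the destination is fixed by which port the traffic arrived on, which is why the ISP's BNG shows up as the first IP hop.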

This means that QUIC - and every other ISP - doesn't need to have gear in every single exchange, and Chorus doesn't have to host hundreds of rack units of ISPs' hardware. It's elegant, and knowing how it works, I don't begrudge Chorus (and the other WSPs) their monthly fee. We have it pretty good here - the model might not be perfect, but it could be a whole lot worse.

So in summary, the flow:

- Your router

- Your ONT

- A 1:16 split back to the exchange

- Various bits of the Chorus network, ending in an Ethernet handover point for your ISP

- Your ISP's BNG

- 'teh Interntz

How big IS the network?

Ah yes, the big question. How much can this network handle?

The answer is ... well... more than we use right now. Or more than when Fortnite releases a big update.

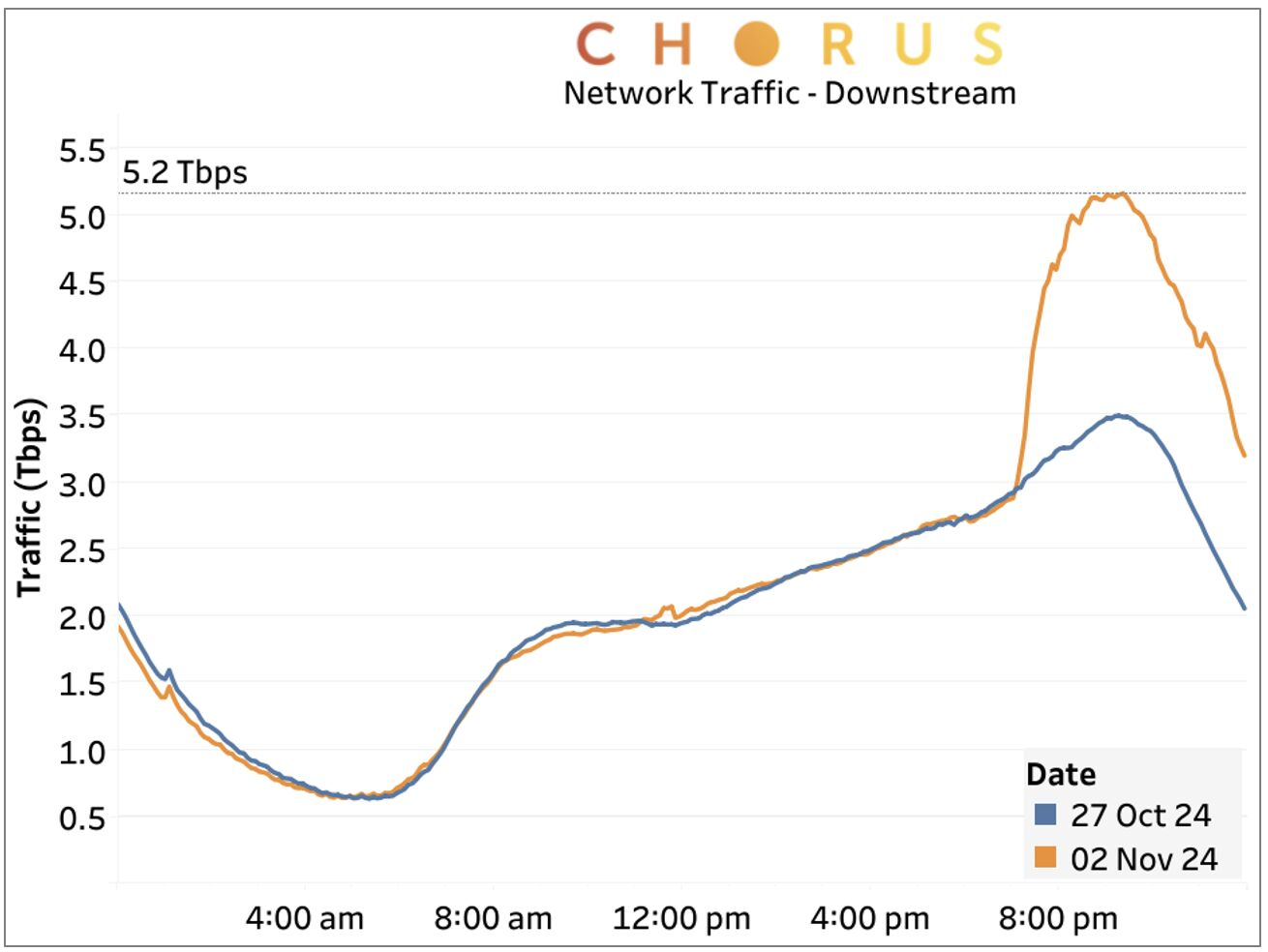

On a normal day, the network hits about 3.5 terabits per second (Tbps) at around 8 or 10pm. When Fortnite released their latest patch, it peaked at 5.2 Tbps, which is a new record. For comparison, when we were all at home during the first COVID lockdown in 2020, the peak was around 2 Tbps.
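To put those peaks side by side, here is a quick sketch of the comparison. The constants are just the figures quoted above, and the function name is invented for the example.

```python
NORMAL_PEAK_TBPS = 3.5         # typical evening peak
FORTNITE_PEAK_TBPS = 5.2       # record peak on the game patch day
LOCKDOWN_2020_PEAK_TBPS = 2.0  # peak during the first COVID lockdown


def percent_above(value: float, baseline: float) -> float:
    """How far `value` sits above `baseline`, as a percentage."""
    return (value / baseline - 1) * 100


if __name__ == "__main__":
    vs_normal = percent_above(FORTNITE_PEAK_TBPS, NORMAL_PEAK_TBPS)
    vs_lockdown = percent_above(FORTNITE_PEAK_TBPS, LOCKDOWN_2020_PEAK_TBPS)
    print(f"Record peak vs a normal evening: +{vs_normal:.0f}%")
    print(f"Record peak vs 2020 lockdown peak: +{vs_lockdown:.0f}%")
```

So the patch-day record was roughly half again as much as a normal evening, and a bit over two and a half times the 2020 lockdown peak.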

When asked, Chorus couldn't tell us what the network capacity is, because it's basically a distributed network. The path from Waiheke is monitored, but if it happens to be overloaded, it doesn't affect the path from Mt Eden. So an overall network "capacity" isn't a meaningful metric - only network congestion (or how close it is to congestion) on a given link. And that's being monitored 24/7.

But the current peak of 5.2 Tbps is not even close, I'm told. There's a lot of life in this (not at all) old girl yet.

For comparison, New Zealand's major external link - the Southern Cross Cable - has a lit capacity of 92 Tbps, and the Hawaiki cable is 120 Tbps. SCC was only expected to have 20 Tbps in 2020, but the technology at each end keeps getting upgraded, which adds more capacity without having to touch the actual cable. Chorus and the other WSPs could do the same locally - which is why 5 Tbps is likely not even touching the sides of the physical capacity of the network. They might have to upgrade the optics at each end (the ONT and the ports in the OLT), but the cables have a huge capacity available with plenty of built-in redundancy.

Network traffic trends

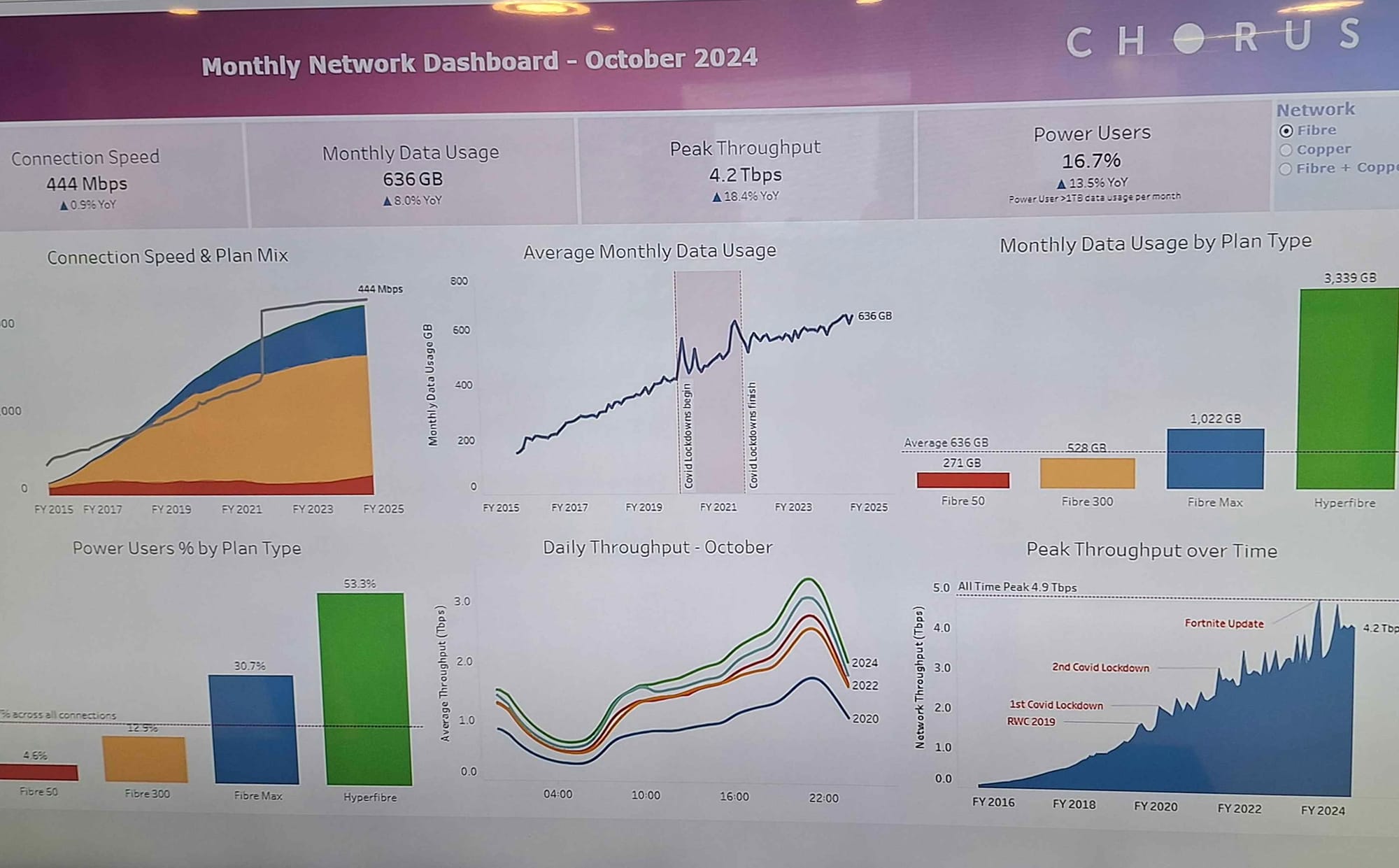

One of the TVs had a big graph showing some network-wide stats, most going from the start of the rollout to now.

Some of the takeaways:

- The average connection speed on the network is about 444 Mbps, so given that plans are 50, 300 and 950 Mbps, a sizeable share of households must be on gigabit. Very few have Hyperfibre, and the majority are on 300/100.

- Monthly usage rises with plan speed, but is generally flattening out

- 10 Mbps ADSL used about 10 GB (politicians: why do we need to roll out fibre then!?)

- 50 Mbps is using around 270 GB; 300 Mbps uses around 530 GB; 950 Mbps uses about 1 TB and Hyperfibre hits around 3.3 TB a month.

- The peaks of the second COVID lockdown were normal throughput about 18 months later, but the over-investment for the Rugby World Cup (2019) meant that the network was more than prepared for the first lockdown.
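Those monthly totals sound large, but spread over a whole month they are a tiny fraction of what each line could carry. The sketch below converts the usage figures above into an average rate. It assumes a 30-day month, decimal units (1 GB = 10^9 bytes, 1 TB = 1000 GB), and function names invented for the example. Hyperfibre is left out because the plan speed behind the 3.3 TB figure wasn't given.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumption: a 30-day month

# Plan speed (Mbps) -> approximate monthly usage (GB), from the figures above.
MONTHLY_USAGE_GB = {50: 270, 300: 530, 950: 1000}


def average_mbps(gb_per_month: float) -> float:
    """Average rate in Mbps if the monthly usage were spread evenly over time."""
    bits = gb_per_month * 1e9 * 8  # assumption: decimal gigabytes
    return bits / SECONDS_PER_MONTH / 1e6


def utilisation_percent(plan_mbps: float, gb_per_month: float) -> float:
    """Average rate as a percentage of the plan's headline speed."""
    return 100 * average_mbps(gb_per_month) / plan_mbps


if __name__ == "__main__":
    for plan, usage in MONTHLY_USAGE_GB.items():
        print(f"{plan} Mbps plan: average {average_mbps(usage):.2f} Mbps, "
              f"{utilisation_percent(plan, usage):.2f}% of line rate")
```

On these assumptions, even the gigabit households average only about 3 Mbps over the month, which fits the point that what matters is the evening peak, not the average.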

All up, it was a great tour, and lovely to geek out with a bunch of like-minded folks.

Update with minor amendments following technical clarification from Chorus.

Nic Wise is a Waiheke-based software developer. He blogs about tech and other topics on Fastchicken.co.nz.
