The spectacular success of large language models such as ChatGPT has helped fuel this growth in energy demand. At 2.9 watt-hours per ChatGPT request, AI queries require about 10 times the electricity of traditional Google queries, according to the Electric Power Research Institute, a nonprofit research firm. Emerging AI capabilities such as audio and video generation are likely to add to this energy demand.

The energy needs of AI are shifting the calculus of energy companies. They’re now exploring previously untenable options, such as restarting a shuttered nuclear reactor at Three Mile Island, the power plant known for the infamous 1979 accident. The reactor slated for restart, Unit 1, was not the one damaged in 1979; it ran until 2019.

Data centres have grown continuously for decades, but growth in the still-young era of large language models has been exceptional. AI requires far more computational and data storage resources than the pre-AI rate of data centre growth could provide.

AI and the grid

Because of AI, the electrical grid, which in many places is already near capacity or prone to stability problems, is under more pressure than before. There is also a substantial lag between computing growth and grid growth: data centres take one to two years to build, while adding new power to the grid takes more than four years.

As a recent report from the Electric Power Research Institute lays out, just 15 states contain 80% of the data centres in the US. Some states – such as Virginia, home to Data Centre Alley – astonishingly have over 25% of their electricity consumed by data centres. There are similar trends of clustered data centre growth in other parts of the world. For example, Ireland has become a data centre nation.

AI is having a big impact on the electrical grid and, potentially, the climate.

Along with the need to add more power generation to sustain this growth, nearly all countries have decarbonisation goals. This means they are striving to integrate more renewable energy sources into the grid. Renewables such as wind and solar are intermittent: The wind doesn’t always blow and the sun doesn’t always shine. The dearth of cheap, green and scalable energy storage means the grid faces an even bigger problem matching supply with demand.

Additional challenges to data centre growth include the increasing use of water cooling for efficiency, which strains limited freshwater sources. As a result, some communities are pushing back against new data centre investments.

Better tech

There are several ways the industry is addressing this energy crisis. First, computing hardware has become substantially more energy efficient over the years in terms of the operations executed per watt consumed. Data centres’ power usage effectiveness (PUE), the ratio of a facility’s total power consumption to the power used by its computing equipment, has been reduced to 1.5 on average, and even to an impressive 1.2 in advanced facilities. A PUE of 1.0 would mean every watt goes to computing, with nothing spent on cooling or other infrastructure. New data centres cool more efficiently by using water cooling and, when available, cool outside air.
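Power usage effectiveness is conventionally defined as total facility power divided by the power drawn by IT equipment, so the figures above translate directly into overhead. The minimal sketch below makes that arithmetic concrete; the function names and the 10 MW IT load are hypothetical, chosen only for illustration.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def facility_power_kw(it_equipment_kw: float, pue_value: float) -> float:
    """Total facility draw needed to deliver a given IT load at a given PUE."""
    return it_equipment_kw * pue_value


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility power spent on cooling and other overhead."""
    return (pue_value - 1.0) / pue_value


if __name__ == "__main__":
    it_load_kw = 10_000.0  # hypothetical 10 MW of servers
    for p in (1.5, 1.2):
        total = facility_power_kw(it_load_kw, p)
        print(f"PUE {p}: {total:,.0f} kW total, {overhead_share(p):.0%} overhead")
```

At a PUE of 1.5, a 10 MW IT load draws about 15 MW in total, a third of it overhead; at 1.2 the total falls to about 12 MW, with roughly a sixth going to overhead.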

Unfortunately, efficiency alone is not going to solve the sustainability problem. In fact, Jevons paradox suggests that efficiency gains may increase total energy consumption in the longer run: as computing becomes cheaper, people use more of it. In addition, hardware efficiency gains have slowed substantially, as the industry has hit the limits of chip technology scaling.
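Jevons paradox can be illustrated with a deliberately simplified demand model. The sketch below assumes, purely for illustration, that the cost of computing is proportional to the energy per operation and that demand for operations follows a constant price elasticity; neither assumption comes from the article, and real demand is far messier. Under those assumptions, total energy use rises after an efficiency gain whenever demand is elastic (elasticity above 1).

```python
def total_energy(energy_per_op: float, elasticity: float, scale: float = 1.0) -> float:
    """Toy model: demand for operations ~ (cost per op) ** -elasticity,
    with cost per op ASSUMED proportional to energy per op."""
    demand_ops = scale * energy_per_op ** (-elasticity)
    return demand_ops * energy_per_op


def energy_change(efficiency_gain: float, elasticity: float) -> float:
    """Ratio of total energy after vs. before an efficiency_gain-fold improvement."""
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    before = total_energy(1.0, elasticity)
    after = total_energy(1.0 / efficiency_gain, elasticity)
    return after / before


if __name__ == "__main__":
    # Hypothetical elasticities: inelastic, unit-elastic, elastic demand
    for eps in (0.5, 1.0, 1.5):
        print(f"elasticity {eps}: 2x efficiency -> energy x{energy_change(2.0, eps):.2f}")
```

In this toy model the ratio works out to the efficiency gain raised to the power (elasticity minus 1), so a doubling of efficiency cuts energy use only when demand is inelastic.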

To continue improving efficiency, researchers are designing specialised hardware such as accelerators, new integration technologies such as 3D chips, and new chip cooling techniques.

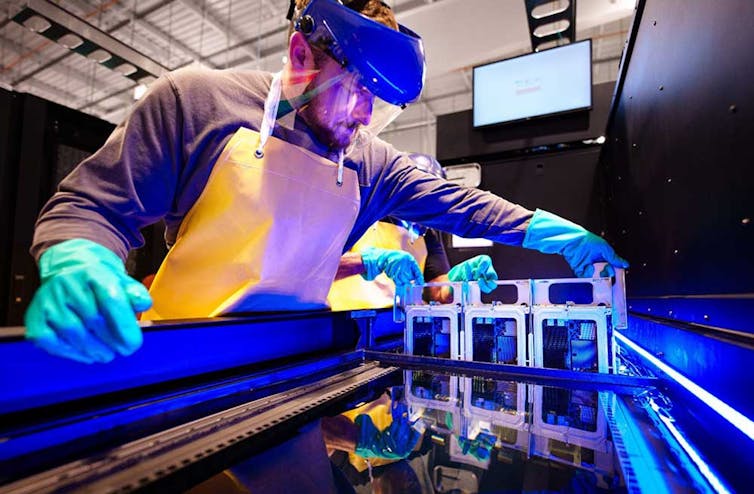

Similarly, researchers are increasingly studying and developing data centre cooling technologies. The Electric Power Research Institute report endorses new cooling methods, such as air-assisted liquid cooling and immersion cooling. While liquid cooling has already made its way into data centres, only a few new data centres have implemented immersion cooling, which is still in development.

Running computer servers in a liquid, rather than in air, could be a more efficient way to cool them. (Craig Fritz, Sandia National Laboratories)

Flexible future

A new way of building AI data centres is flexible computing, where the key idea is to compute more when electricity is cheaper, more available and greener, and less when it’s more expensive, scarce and polluting.

Data centre operators can convert their facilities to be a flexible load on the grid. Academia and industry have provided early examples of data centre demand response, where data centres regulate their power depending on power grid needs. For example, they can schedule certain computing tasks for off-peak hours.
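The idea of shifting deferrable work to off-peak hours can be sketched as a small greedy scheduler. This is a minimal illustration, not how any particular operator does it: the `Job` type, the hourly `grid_signal` values (which could stand for price or carbon intensity; lower is better) and the per-hour capacity limit are all hypothetical, and jobs are assumed to be interruptible so their hours need not be contiguous.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    hours: int  # one-hour slots needed; ASSUMED interruptible (non-contiguous OK)


def schedule_off_peak(jobs, grid_signal, capacity_per_hour):
    """Greedy sketch: give each deferrable job the lowest-signal hours
    (cheapest or cleanest) that still have spare capacity."""
    # Hour indices sorted from best (lowest signal) to worst
    order = sorted(range(len(grid_signal)), key=lambda h: grid_signal[h])
    used = [0] * len(grid_signal)
    plan = {}
    for job in jobs:
        slots = []
        for h in order:
            if len(slots) == job.hours:
                break
            if used[h] < capacity_per_hour:
                used[h] += 1
                slots.append(h)
        if len(slots) < job.hours:
            raise ValueError(f"not enough capacity for {job.name}")
        plan[job.name] = sorted(slots)
    return plan


if __name__ == "__main__":
    # Hypothetical six-hour carbon-intensity profile (gCO2/kWh)
    signal = [450, 420, 300, 250, 380, 500]
    print(schedule_off_peak([Job("train", 2), Job("batch", 2)], signal, 1))
```

A greedy pass is easy to reason about but not optimal in general; a production system would also respect deadlines, job dependencies and forecast uncertainty.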

Implementing broader, larger-scale flexibility in power consumption requires innovation in hardware, software and coordination between data centres and the grid. Especially for AI, there is much room to develop new strategies to tune data centres’ computational loads and therefore their energy consumption. For example, when training AI models, data centres can trade off some accuracy to reduce their workloads.
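One simple, commonly discussed way to tune a facility's computational load is power capping: lowering the power budget for computing when the grid is stressed. The sketch below shows that technique (not accuracy scaling specifically) under stated assumptions; the `grid_stress` signal in the range 0 to 1 and the minimum and maximum caps are hypothetical.

```python
def power_cap_kw(grid_stress: float, min_cap_kw: float, max_cap_kw: float) -> float:
    """Linearly lower the compute power cap as grid stress rises.
    grid_stress is a HYPOTHETICAL signal: 0 = relaxed grid, 1 = severe stress."""
    if min_cap_kw > max_cap_kw:
        raise ValueError("min_cap_kw must not exceed max_cap_kw")
    s = min(max(grid_stress, 0.0), 1.0)  # clamp out-of-range signals
    return max_cap_kw - s * (max_cap_kw - min_cap_kw)


if __name__ == "__main__":
    # Hypothetical facility: 10 MW normally, down to 6 MW under severe stress
    for stress in (0.0, 0.5, 1.0):
        print(f"stress {stress}: cap {power_cap_kw(stress, 6_000, 10_000):,.0f} kW")
```

The linear mapping is only the simplest choice; an operator might instead use discrete steps agreed with the grid operator.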

Realising this vision requires better modelling and forecasting. Data centres can try to better understand and predict their loads and operating conditions. It’s also important to predict the grid’s load and growth.

The Electric Power Research Institute’s load forecasting initiative involves activities to help with grid planning and operations. Comprehensive monitoring and intelligent analytics – possibly relying on AI – for both data centres and the grid are essential for accurate forecasting.
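Real load forecasting relies on far richer models and data than anything shown here, and the sketch below is not EPRI's method. As a minimal baseline only, a seasonal-average forecast predicts each hour of the next day as the mean of the same hour over recent days. It assumes the history is evenly spaced and ends exactly at the end of a complete season (for hourly data, at midnight); the function name and parameters are hypothetical.

```python
from statistics import mean


def seasonal_average_forecast(history, season_length=24, seasons=7):
    """Forecast the next season_length values as the average of the same
    position in each of the last `seasons` complete seasons.
    ASSUMES history ends exactly at the end of a season."""
    if season_length <= 0 or seasons <= 0:
        raise ValueError("season_length and seasons must be positive")
    needed = season_length * seasons
    if len(history) < needed:
        raise ValueError("not enough history")
    recent = history[-needed:]
    # recent[h::season_length] picks position h from every season
    return [mean(recent[h::season_length]) for h in range(season_length)]


if __name__ == "__main__":
    # Hypothetical loads (MW) for two "days" of four periods each
    loads = [10, 12, 18, 14, 12, 14, 20, 16]
    print(seasonal_average_forecast(loads, season_length=4, seasons=2))
```

Baselines like this are mainly useful as a yardstick: a more sophisticated model that cannot beat them is not adding value.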

On the edge

The US is at a critical juncture with the explosive growth of AI. It is immensely difficult to integrate hundreds of megawatts of electricity demand into already strained grids. It might be time to rethink how the industry builds data centres.

One possibility is to sustainably build more edge data centres – smaller, widely distributed facilities – to bring computing to local communities. Edge data centres can also reliably add computing power to dense, urban regions without further stressing the grid. While these smaller centres currently make up 10% of data centres in the US, analysts project the market for smaller-scale edge data centres to grow by over 20% in the next five years.

Along with converting data centres into flexible and controllable loads, innovating in the edge data centre space may make AI’s energy demands much more sustainable.

Ayse Coskun, Professor of Electrical and Computer Engineering, Boston University

This article is republished from The Conversation under a Creative Commons license. Read the original article.
